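1. Overview of the Hadoop ecosystem

Hadoop is a distributed-systems infrastructure developed by the Apache Foundation.

It lets users write distributed programs without understanding the low-level details of distribution, and use the full power of a cluster for high-speed computation and storage.

It is reliable, efficient, and scalable.

The core of Hadoop consists of YARN, HDFS, and MapReduce.

The figure below shows the Hadoop ecosystem, which integrates the Spark ecosystem. Hadoop and Spark will coexist for some time to come, and both can be deployed on top of resource managers such as YARN and Mesos.

The installation steps on Linux are as follows.

1. Disable the firewall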

#chkconfig iptables --list

#chkconfig iptables off

#service iptables status

#service iptables stop

2. Configure the IP address

#cd /etc/sysconfig/network-scripts/

Bridged mode (contents of ifcfg-eth0):

DEVICE=eth0

BOOTPROTO=none

HWADDR=00:0c:29:fb:e0:db

IPV6INIT=yes

NM_CONTROLLED=yes

ONBOOT=yes

IPADDR=192.168.0.10

TYPE=Ethernet

UUID="b6fd9228-061a-432d-80fe-f3597954261b"

NETMASK=255.255.255.0

DNS2=180.168.255.118

GATEWAY=192.168.0.1

DNS1=116.228.111.18

USERCTL=no

ARPCHECK=no

3. Change the hostname

Edit the hosts file under /etc: at the prompt, run vi /etc/hosts and change localhost.localdomain to the hostname you want.

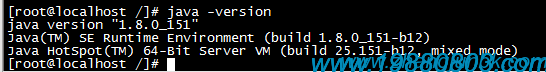

4. Install JDK 1.8.0_151

Add the following to /etc/profile:

JAVA_HOME=/zhangjun/programe/jdk1.8.0_151

PATH=$JAVA_HOME/bin:$PATH

CLASSPATH=.:$JAVA_HOME/lib:$JAVA_HOME/jre/lib:$CLASSPATH

export JAVA_HOME

export PATH

export CLASSPATH

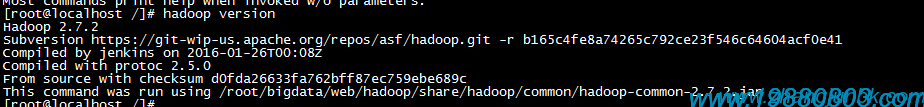

5. Install Hadoop 3.0.0

Add the following to /etc/profile:

export HADOOP_HOME=/zhangjun/programe/hadoop-3.0.0

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

6. Apply the configuration

#source /etc/profile

7. Check the versions
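The original gives no command for this step. The following is a minimal sketch, assuming that both java and hadoop should be reachable through PATH once /etc/profile has been sourced. check_tool is a hypothetical helper name; java -version and hadoop version are the tools' own version commands.

```shell
#!/bin/sh
# check_tool is a hypothetical helper: report whether a command is on PATH.
check_tool() {
    if command -v "$1" >/dev/null 2>&1; then
        echo "$1: found at $(command -v "$1")"
    else
        echo "$1: not found on PATH" >&2
        return 1
    fi
}

# Typical use after `source /etc/profile` (left commented so the snippet
# is safe to paste on a machine where the tools are not installed yet):
#   check_tool java   && java -version
#   check_tool hadoop && hadoop version
```

If either tool is reported as missing, the PATH lines written to /etc/profile in steps 4 and 5 are the first thing to recheck.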

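8. Important directories

The Hadoop installation directory contains several important subdirectories:

sbin: scripts that start or stop Hadoop services

bin: scripts that operate on Hadoop services (HDFS, YARN)

etc: Hadoop's configuration files

share: Hadoop's dependency JARs and documentation; the documentation can be deleted

lib: Hadoop's native libraries (used for compressing and decompressing data)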

9. Configure Hadoop

Change the hostname:

#cp /etc/sysconfig/network /etc/sysconfig/network.20171218.bak

#vi /etc/sysconfig/network

NETWORKING=yes

#HOSTNAME=localhost.localdomain

HOSTNAME=hadoop

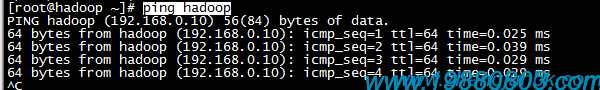

Map the hostname to its IP address:

# cp /etc/hosts /etc/hosts.20171218.bak

#vi /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.0.10 hadoop

Test the hostname:

# ping hadoop

Create the group hadoop:

#groupadd hadoop

Create the user hadoop and add it to the group hadoop:

#useradd -g hadoop -d /home/hadoop -s /bin/bash -m hadoop

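The options mean:

-d, --home HOME_DIR: home directory of the new account

-g, --gid GROUP: name or ID of the account's primary group

-s, --shell SHELL: login shell of the new account

-m, --create-home: create the home directory if it does not exist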

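Grant sudo privileges with visudo

#visudo

Note:

visudo opens /etc/sudoers in an editor, much like vi /etc/sudoers, but it also locks the file and checks the syntax before saving.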

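Configure passwordless SSH login

1) Check whether SSH is installed: ssh -V

If it prints a version string like the following, SSH is installed:

OpenSSH_6.2p2 Ubuntu-6ubuntu0.1, OpenSSL 1.0.1e 11 Feb 2013

Otherwise install it, for example with sudo apt-get install ssh on Ubuntu.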

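2) ssh-keygen -t dsa -P '' -f ~/.ssh/id_dsa

Here ssh-keygen generates a key pair; -t (case-sensitive) selects the key type, in this case dsa; -P supplies the passphrase (empty here); and -f names the key file to create. OpenSSH 7.0 and later disable DSA keys by default, so on newer systems use -t rsa and the file name id_rsa instead. (Keys and passphrases are not covered in detail here; interested readers can consult the SSH documentation.)

The leading dot in .ssh means the directory is hidden.

~ stands for the current user's home directory, here /home/u.

The command creates two files in the .ssh directory, id_dsa and id_dsa.pub. They are the SSH private key and public key, which work like a key and a lock. The next step appends id_dsa.pub (the public key) to the list of authorized keys.

3) cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys

This appends the public key to authorized_keys, the file that holds the public keys accepted for authentication.

Passwordless login to the local machine is now set up.

4) Log in over SSH to confirm that no password is requested:

~$ ssh localhost

~$ exit

From now on, logging in does not require a password.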
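If ssh localhost still asks for a password, file permissions are a common cause: with sshd's StrictModes setting (on by default), keys may be ignored when .ssh or authorized_keys is writable by other users. The sketch below appends a public key only if it is not already present and then tightens the permissions. authorize_key is a hypothetical helper name, and the optional second argument exists only so the function can be tried against a scratch directory.

```shell
#!/bin/sh
# authorize_key PUBKEY_FILE [SSH_DIR]
# Hypothetical helper: idempotently add a public key to authorized_keys.
authorize_key() {
    pub=$1
    dir=${2:-$HOME/.ssh}
    mkdir -p "$dir" && chmod 700 "$dir"
    touch "$dir/authorized_keys"
    # -F fixed string, -x whole line, -q quiet: skip keys already present
    grep -qxF -- "$(cat "$pub")" "$dir/authorized_keys" \
        || cat "$pub" >> "$dir/authorized_keys"
    chmod 600 "$dir/authorized_keys"
}

# Example (commented out so pasting the snippet changes nothing):
#   authorize_key ~/.ssh/id_dsa.pub
```

Running it twice leaves a single copy of the key, unlike a plain cat >> which appends a duplicate each time.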

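5) scp <local file> <user>@<ssh server address>:<destination path and file name>

Over SSH, files cannot be moved between the local machine and the server by drag-and-drop or copy-and-paste, so use the scp command instead.

For example, to upload a.txt from the current directory to the test folder on the server and rename it b.txt, with server address 127.0.0.1 and user name admin:

scp a.txt admin@127.0.0.1:./test/b.txt

Downloading is just as simple: swap the local file name and the server part.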

scp hadoop-1.0.3.tar.gz hadoop02@10.130.26.18:~/

10. Configure standalone (local) mode

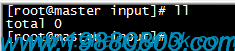

10.1. Create the input directory (later steps use /home/hadoop/input)

#cd /home/hadoop

#mkdir -p input

10.2. Set JAVA_HOME in Hadoop's hadoop-env.sh

Original:

export JAVA_HOME=${JAVA_HOME}

Change to:

export JAVA_HOME=/home/hadoop/soft/jdk1.8.0_151

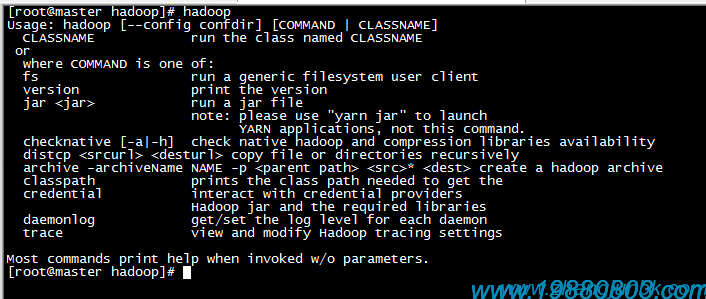

10.3. Check that Hadoop is installed

#hadoop

If the command prints its usage text, Hadoop is installed correctly.

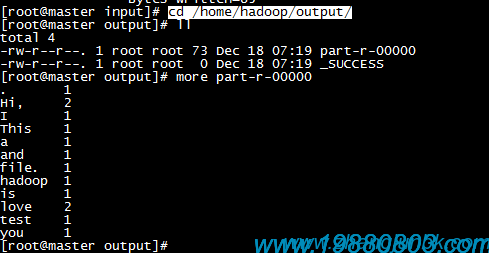

10.4. Word count

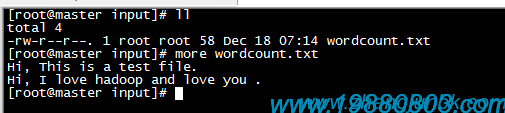

Create the input directory and put the input file in it:

# cd /home/hadoop/input/

#vim wordcount.txt

Hi, This is a test file.

Hi, I love hadoop and love you .

Run the word count:

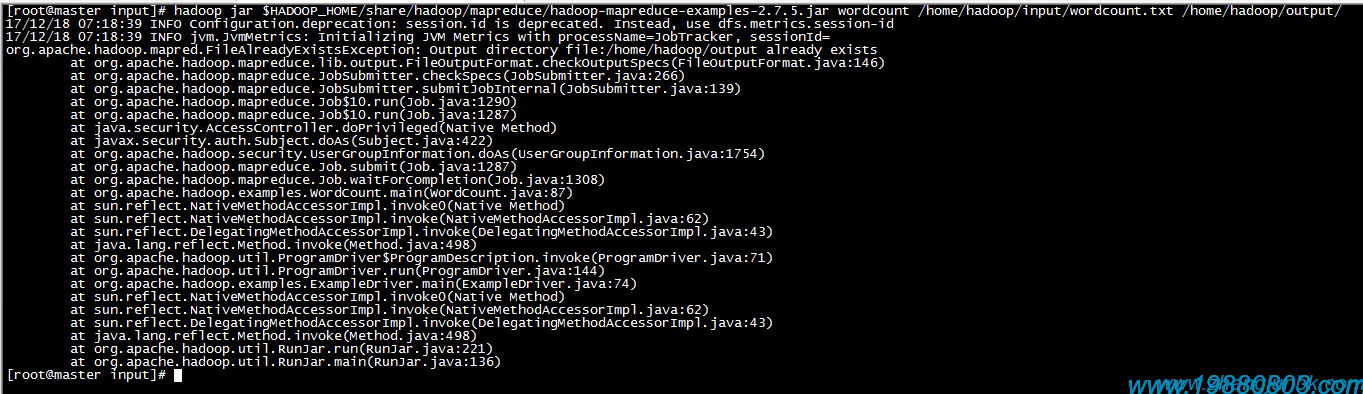

# hadoop jar $HADOOP_HOME/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.5.jar wordcount /home/hadoop/input/wordcount.txt /home/hadoop/output/

删除output

#rm -rf /home/hadoop/output/

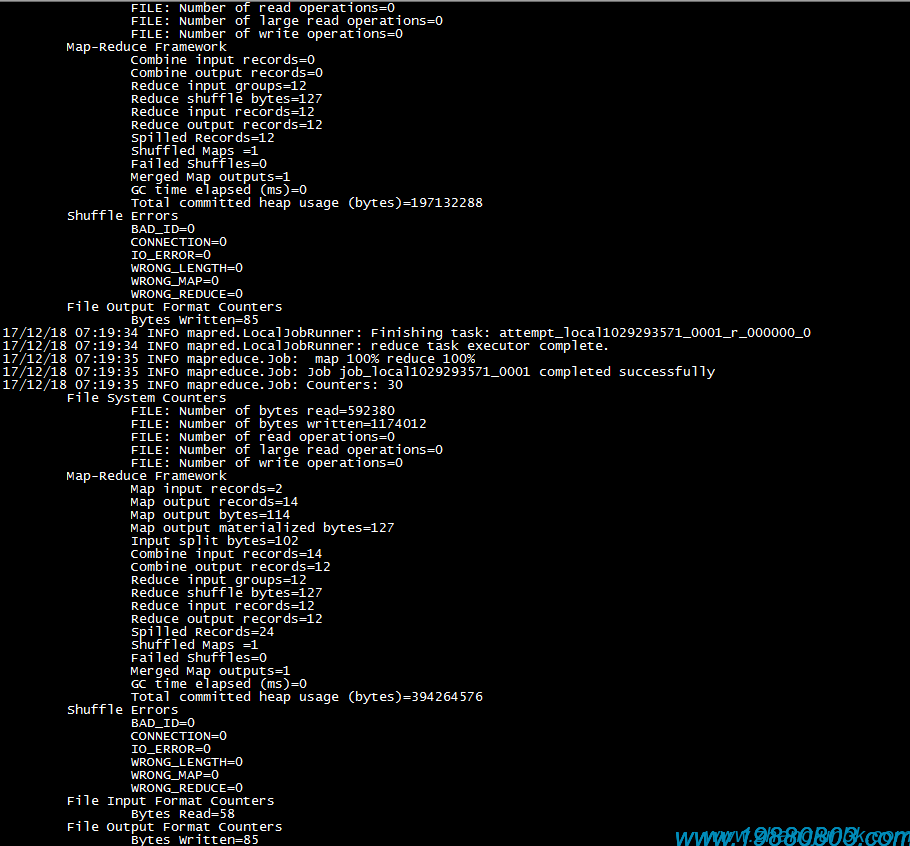

再次运行

查看输出文件

# cd /home/hadoop/output/

#ll

#more part-r-00000
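To sanity-check part-r-00000, the same counts can be computed locally without Hadoop. This is a sketch under the assumption that the bundled wordcount example splits its input on whitespace only, so tokens such as "Hi," and "." count as words. local_wordcount is a hypothetical helper, and the order of its output lines may differ from Hadoop's.

```shell
#!/bin/sh
# local_wordcount FILE
# Hypothetical helper: print "word<TAB>count" for every whitespace-separated
# token in FILE, sorted bytewise.
local_wordcount() {
    awk '{ for (i = 1; i <= NF; i++) n[$i]++ }
         END { for (w in n) printf "%s\t%d\n", w, n[w] }' "$1" | LC_ALL=C sort
}

# Example (path taken from the steps above):
#   local_wordcount /home/hadoop/input/wordcount.txt
```

For the two-line wordcount.txt above, both "Hi," and "love" should come out with a count of 2 and every other token with 1.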

10.5. Back up the standalone-mode files

10.6. The standalone installation is now complete

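11. Configure pseudo-distributed mode

11.1. Overview

Hadoop can run on a single node in pseudo-distributed mode, where each Hadoop daemon runs as a separate Java process. The node acts as both NameNode and DataNode, and files are read from HDFS.

Hadoop's configuration files are in $HADOOP_HOME/etc/hadoop/. Pseudo-distributed mode requires changes to at least two of them: core-site.xml and hdfs-site.xml.

The configuration files are XML; each setting is declared as a property with a name and a value.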
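Because every setting is a name/value pair, a value can be pulled out of a config file with ordinary text tools. The sketch below assumes the one-tag-per-line layout used in this article; get_prop is a hypothetical helper, not a Hadoop command, and the sample file is created only for the demonstration. On an installed system, hdfs getconf -confKey fs.defaultFS reports the effective value instead.

```shell
#!/bin/sh
# get_prop FILE NAME
# Hypothetical helper: print the <value> that follows <name>NAME</name>.
# Assumes each tag sits on a single line, as in the files shown here.
get_prop() {
    awk -v key="$2" '
        index($0, "<name>" key "</name>") { found = 1 }
        found && match($0, /<value>.*<\/value>/) {
            # strip the 7-character <value> and 8-character </value> tags
            print substr($0, RSTART + 7, RLENGTH - 15)
            exit
        }
    ' "$1"
}

# Demonstration on a throwaway file
conf=$(mktemp)
cat > "$conf" <<'EOF'
<configuration>
<property>
<name>fs.defaultFS</name>
<value>hdfs://master:9000</value>
</property>
</configuration>
EOF
get_prop "$conf" fs.defaultFS   # prints hdfs://master:9000
rm -f "$conf"
```

A property that is absent from the file produces no output, which makes the helper easy to use in a quick check before starting the daemons.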

11.2. Disable the firewall

#chkconfig iptables --list

#chkconfig iptables off

#service iptables status

#service iptables stop

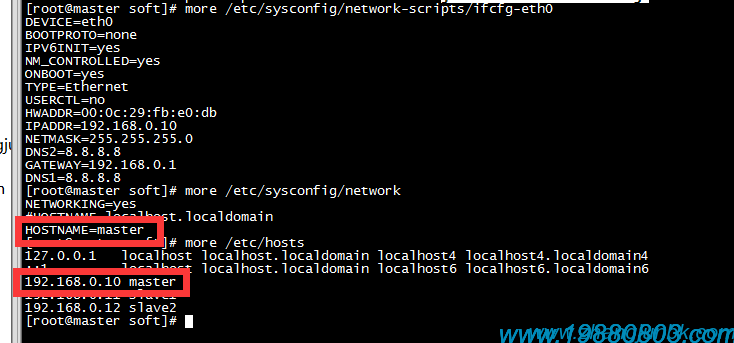

11.3. Set the IP address and hostname

Refer to the system administration documentation.

DEVICE=eth0

BOOTPROTO=none

IPV6INIT=yes

NM_CONTROLLED=yes

ONBOOT=yes

TYPE=Ethernet

USERCTL=no

HWADDR=00:0c:29:fb:e0:db

IPADDR=192.168.0.10

NETMASK=255.255.255.0

DNS2=8.8.8.8

GATEWAY=192.168.0.1

DNS1=8.8.8.8

Change only the parts marked in red.

[root@master soft]# more /etc/sysconfig/network-scripts/ifcfg-eth0

DEVICE=eth0

BOOTPROTO=none

IPV6INIT=yes

NM_CONTROLLED=yes

ONBOOT=yes

TYPE=Ethernet

USERCTL=no

HWADDR=00:0c:29:fb:e0:db

IPADDR=192.168.0.10

NETMASK=255.255.255.0

DNS2=8.8.8.8

GATEWAY=192.168.0.1

DNS1=8.8.8.8

[root@master soft]# more /etc/sysconfig/network

NETWORKING=yes

#HOSTNAME=localhost.localdomain

HOSTNAME=master

[root@master soft]# more /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.0.10 master

[root@master soft]#

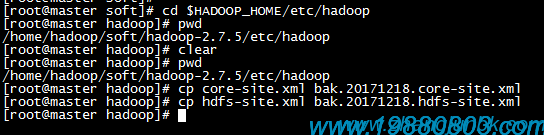

11.4. Edit the Hadoop configuration files

11.4.1. Back up the files

[root@master hadoop]# cd $HADOOP_HOME/etc/hadoop

[root@master hadoop]# pwd

/home/hadoop/soft/hadoop-2.7.5/etc/hadoop

Back up the originals:

[root@master hadoop]# cp core-site.xml bak.20171218.core-site.xml

[root@master hadoop]# cp hdfs-site.xml bak.20171218.hdfs-site.xml

[root@master hadoop]# cp yarn-site.xml bak.20171218.yarn-site.xml

[root@master hadoop]# cp yarn-env.sh bak.20171218.yarn-env.sh

Copy the template:

[root@master hadoop]# cp mapred-site.xml.template mapred-site.xml

11.5. Set JAVA_HOME in Hadoop's hadoop-env.sh

Original:

export JAVA_HOME=${JAVA_HOME}

Change to:

export JAVA_HOME=/home/hadoop/soft/jdk1.8.0_151

11.6. Set JAVA_HOME in Hadoop's yarn-env.sh

Original:

export JAVA_HOME=${JAVA_HOME}

Change to:

export JAVA_HOME=/home/hadoop/soft/jdk1.8.0_151

11.6.1. Edit core-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://master:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>file:///home/hadoop/tmp</value>

</property>

</configuration>

11.6.2. Edit hdfs-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:///home/hadoop/hdfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:///home/hadoop/hdfs/data</value>

</property>

</configuration>

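Pseudo-distributed mode can run with only fs.defaultFS and dfs.replication configured (as in the official tutorial). However, if hadoop.tmp.dir is not set, Hadoop uses the default temporary directory /tmp/hadoop-hadoop (that is, /tmp/hadoop-${user.name}), which the system may clean out on reboot, forcing you to run format again. For that reason hadoop.tmp.dir is set here, along with dfs.namenode.name.dir and dfs.datanode.data.dir; otherwise later steps may fail.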
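Creating the configured directories up front makes their location and ownership explicit before the NameNode is formatted. This is an optional sketch: Hadoop can usually create these directories itself. The base path /home/hadoop matches the values in core-site.xml and hdfs-site.xml above, and make_hdfs_dirs is a hypothetical helper name.

```shell
#!/bin/sh
# make_hdfs_dirs BASE
# Hypothetical helper: create the tmp, name and data directories that the
# configuration above points at, relative to BASE.
make_hdfs_dirs() {
    base=$1
    mkdir -p "$base/tmp" "$base/hdfs/name" "$base/hdfs/data"
}

# Example (commented out; run it as the user that will start Hadoop):
#   make_hdfs_dirs /home/hadoop
```

Running it as the same user that later starts the daemons avoids permission errors on the name and data directories.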

11.6.3. Edit mapred-site.xml

The file does not exist by default; only a template is provided, so make a copy:

# cp mapred-site.xml.template mapred-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.jobhistory.address</name>

<value>master:10020</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>master:19888</value>

</property>

</configuration>

11.6.4. Edit yarn-site.xml

<?xml version="1.0"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<configuration>

<!-- Site specific YARN configuration properties -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>master:8032</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>master:8030</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>master:8035</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address</name>

<value>master:8033</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address</name>

<value>master:8088</value>

</property>

</configuration>

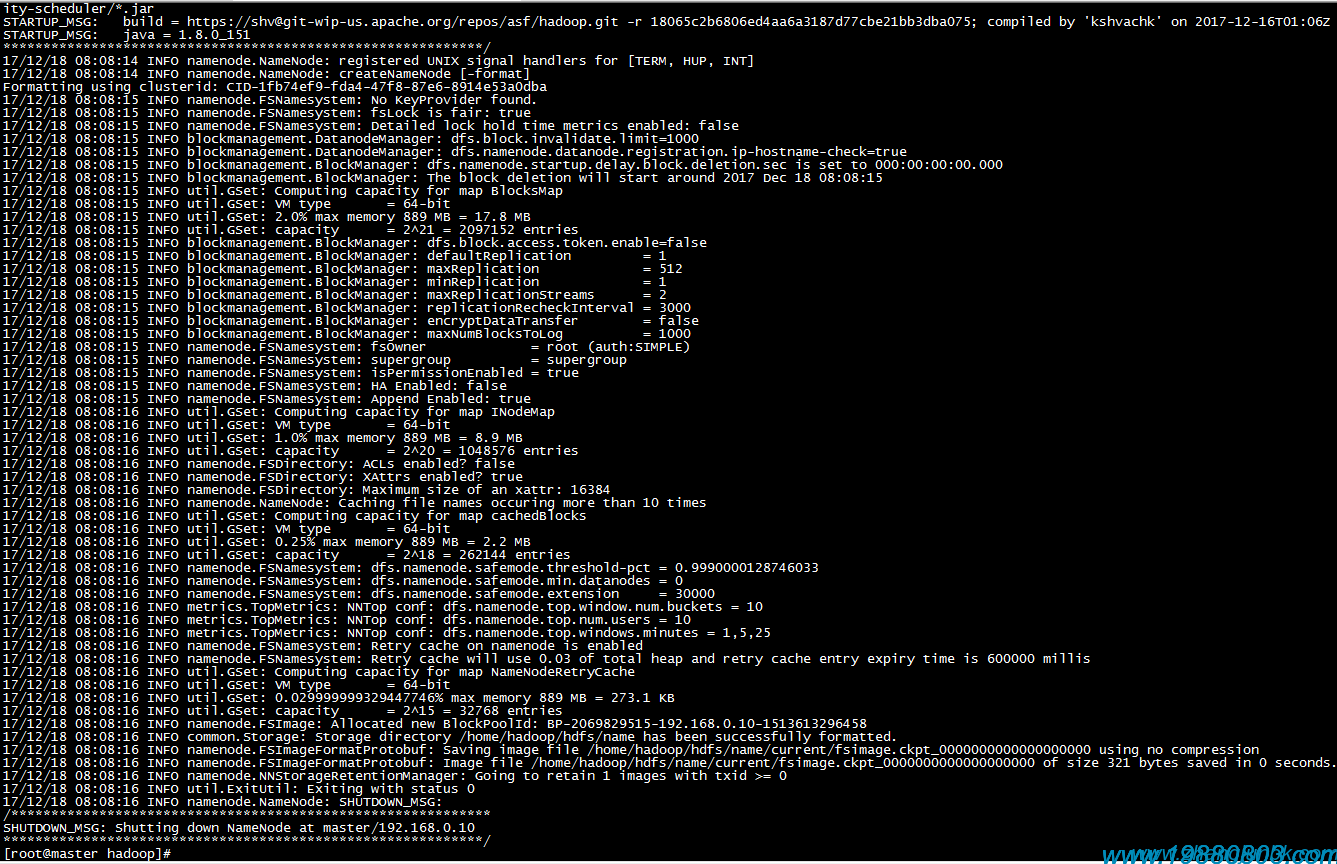

11.7. Format the NameNode

# hdfs namenode -format

11.8. Check the running processes with jps

#jps

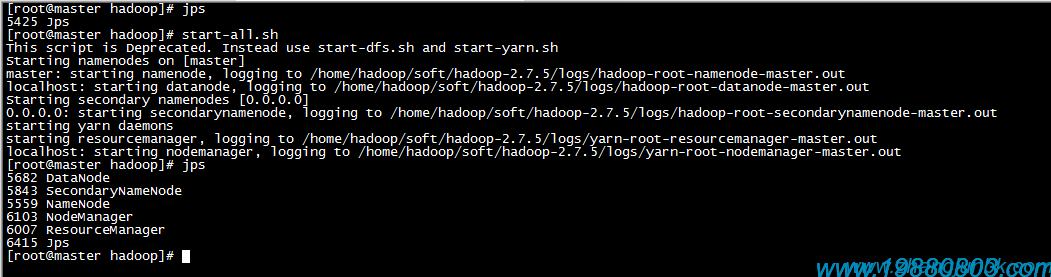

11.9. Start the services

#start-all.sh

[root@master hadoop]# jps

5425 Jps

[root@master hadoop]# start-all.sh

This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh

Starting namenodes on [master]

master: starting namenode, logging to /home/hadoop/soft/hadoop-2.7.5/logs/hadoop-root-namenode-master.out

localhost: starting datanode, logging to /home/hadoop/soft/hadoop-2.7.5/logs/hadoop-root-datanode-master.out

Starting secondary namenodes [0.0.0.0]

0.0.0.0: starting secondarynamenode, logging to /home/hadoop/soft/hadoop-2.7.5/logs/hadoop-root-secondarynamenode-master.out

starting yarn daemons

starting resourcemanager, logging to /home/hadoop/soft/hadoop-2.7.5/logs/yarn-root-resourcemanager-master.out

localhost: starting nodemanager, logging to /home/hadoop/soft/hadoop-2.7.5/logs/yarn-root-nodemanager-master.out

[root@master hadoop]# jps

5682 DataNode

5843 SecondaryNameNode

5559 NameNode

6103 NodeManager

6007 ResourceManager

6415 Jps

[root@master hadoop]#
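The jps listing above shows the five daemons expected in pseudo-distributed mode. A small filter can confirm that none is missing after a restart. This is a sketch: missing_daemons is a hypothetical helper that reads jps output on standard input and prints the name of each expected daemon it does not find.

```shell
#!/bin/sh
# missing_daemons
# Hypothetical helper: read `jps` output on stdin and print every expected
# Hadoop daemon that does not appear in it (prints nothing if all are up).
missing_daemons() {
    out=$(cat)
    for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
        # compare against the whole second field so that NameNode does not
        # match SecondaryNameNode
        printf '%s\n' "$out" |
            awk -v d="$d" '$2 == d { ok = 1 } END { exit !ok }' ||
            echo "$d"
    done
}

# Example (commented out; requires a JDK with jps on PATH):
#   jps | missing_daemons
```

Any name it prints points to the daemon whose log file under $HADOOP_HOME/logs is worth reading first.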

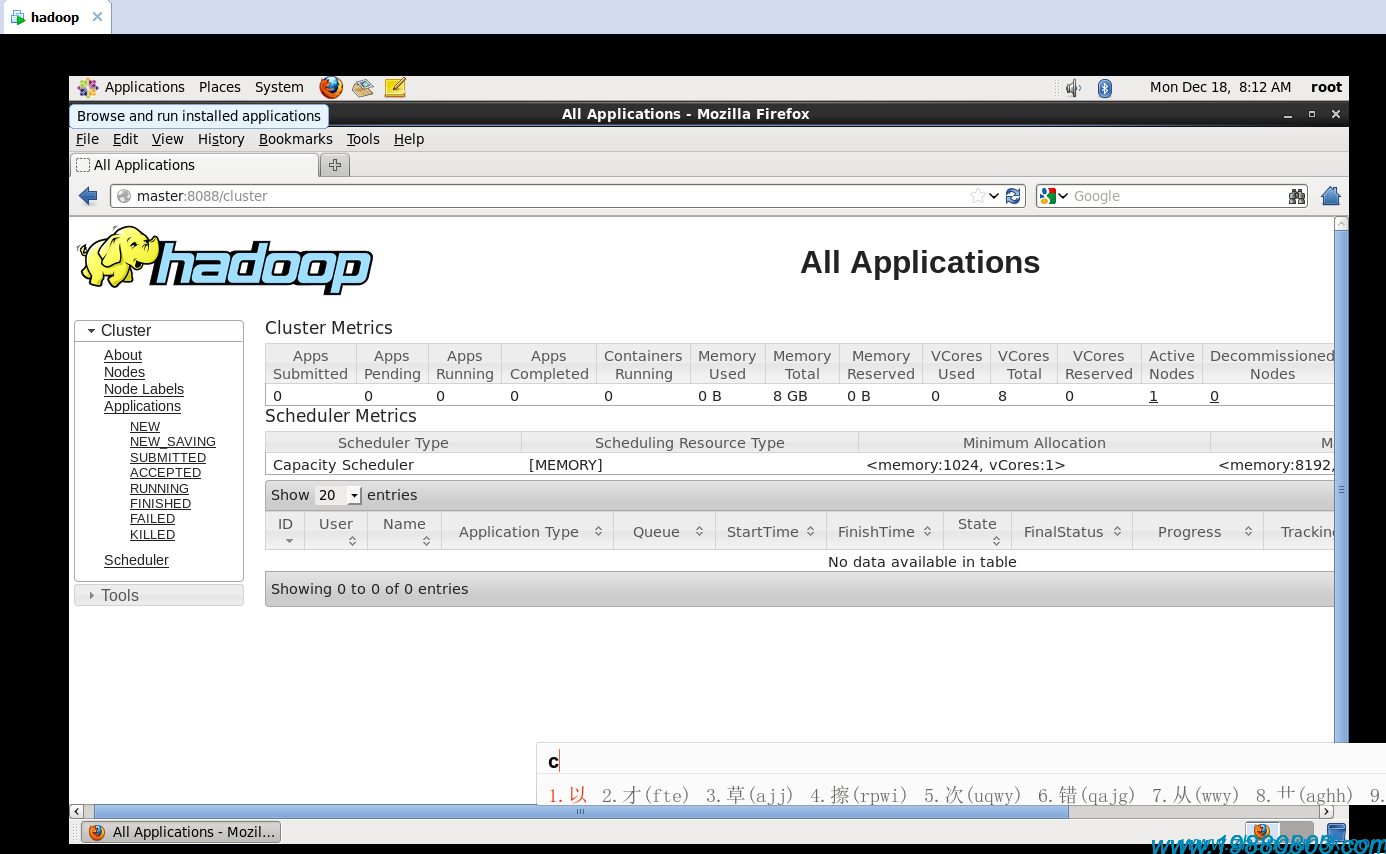

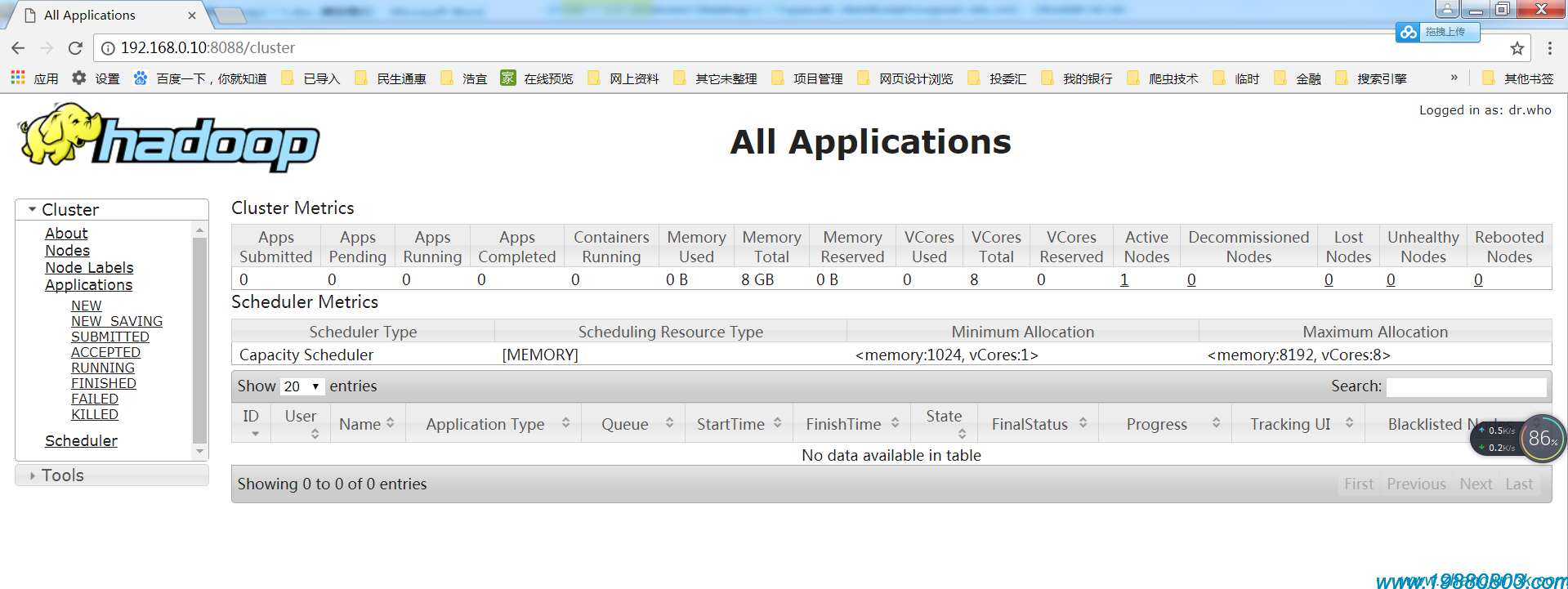

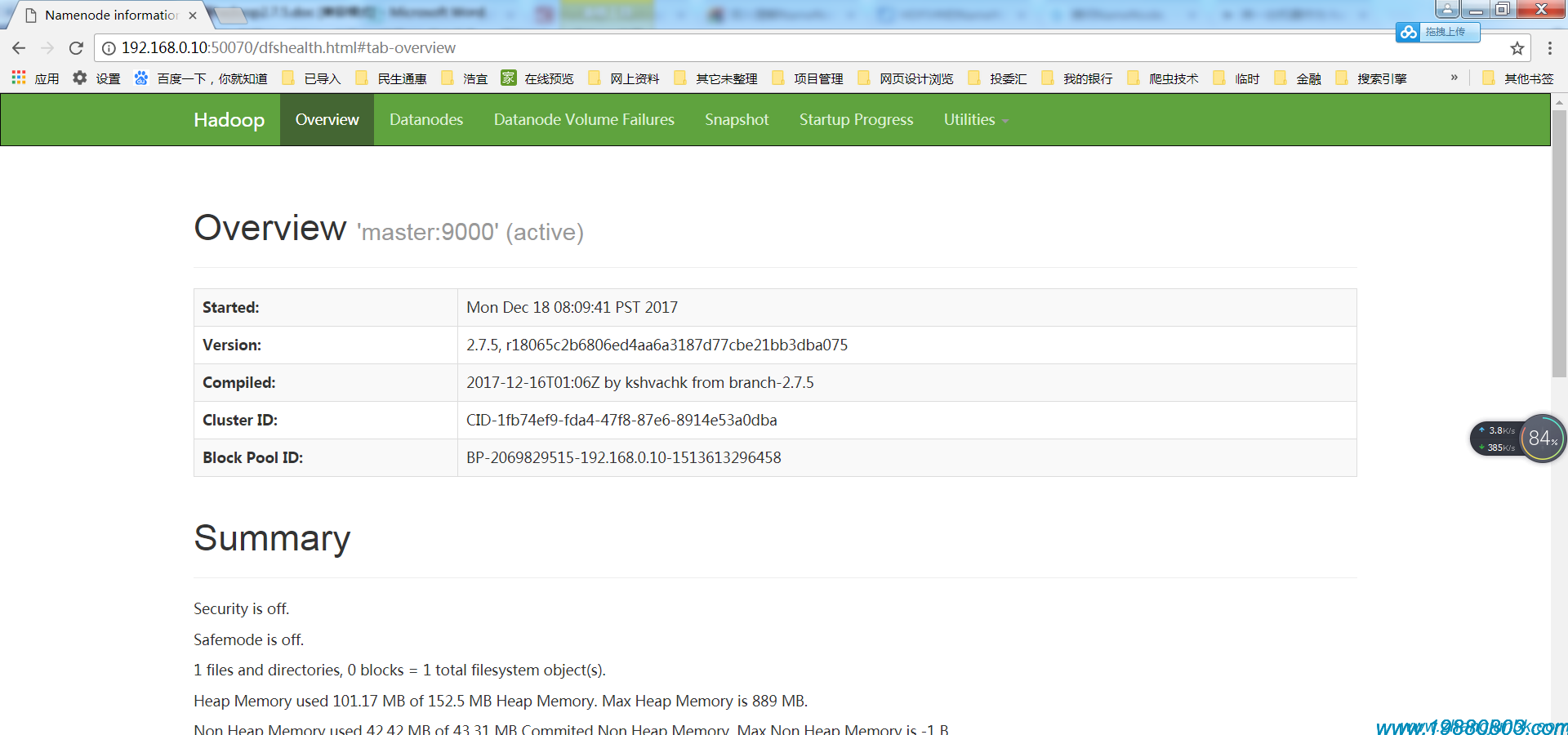

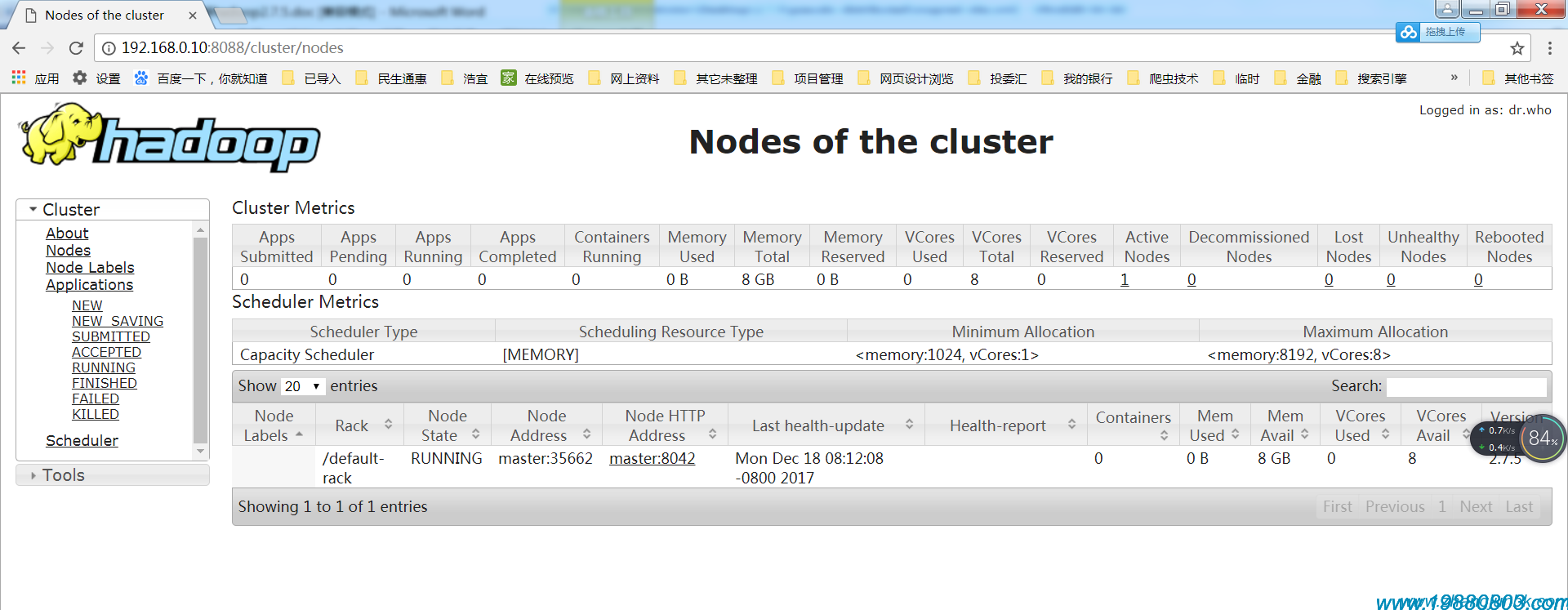

http://master:8088

http://192.168.0.10:8088

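MapReduce web UI (JobHistory server, as configured in mapred-site.xml; port 50030 was the JobTracker UI in Hadoop 1.x)

http://master:19888

HDFS web UI (NameNode; 50070 is the Hadoop 2.x default, 9870 in Hadoop 3.x)

http://master:50070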

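This is an original article by Zhang Jun (张军). You do not need to contact me before reposting it, but please credit Zhang Jun's site 军军小站, personal blog http://www.zhangjunbk.com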